The term DevOps stands for development and operations. DevOps is a movement that focuses on collaboration between developers and operations, empathy for the customer, and infrastructure automation. In traditional models, developers write code and then hand it to operations to deploy and run in a production environment. This often leads to a lack of ownership between the two teams, as well as a slower pace of development, because agility clashes with risk management. In contrast, with a DevOps model, the two teams work together at each stage of software delivery toward common, customer-facing goals. Developers take ownership of their code, from code through production, and operations teams build tooling and processes that help developers leverage automation to build, test, and ship code faster and more reliably.

By breaking through walls in culture and processes, development happens more efficiently. And with the customer experience in mind from beginning to end, a DevOps approach ultimately results in a better product and happier, more empowered teams while delivering more value to customers and the business.

The following checklist is worth keeping in mind when choosing the right DevOps tools.

Step 1: Collaboration

The waterfall approach of planning out all the work and every single dependency for a release runs counter to DevOps. Instead, with an agile approach, you can reduce risk and improve visibility by building and testing software in smaller chunks and iterating on customer feedback. That’s why many teams, including our own, plan and release in sprints of around two to four weeks.

As your team is sharing ideas and planning at the start of each sprint, take into account feedback from the previous sprint’s retrospective so the team and your services are continuously improving. Tools can help you centralize learnings and ideas into a plan. To kick off the brainstorming process, you can identify your target personas, and map customer research data, feature requests, and bug reports to each of them. We like to use physical or digital sticky notes during this process to make it easier to group together common themes and construct user stories for the backlog. By mapping user stories back to specific personas, we can place more of an emphasis on the customer value delivered. After the ideation phase, we organize and prioritize the tasks within a tool that tracks project burndown and daily sprint progress.
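To make the idea of tracking project burndown concrete, here is a minimal sketch of how a burndown could be computed from a backlog of user stories mapped to personas. The `Story` class, its fields, and the sample stories are all hypothetical and not taken from any of the tools listed below.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Story:
    title: str
    persona: str                       # hypothetical persona label, e.g. "admin"
    points: int                        # estimated effort in story points
    done_on_day: Optional[int] = None  # sprint day the story was finished, if any


def burndown(stories: List[Story], sprint_days: int) -> List[int]:
    """Return the story points remaining at the end of each day (day 0 = sprint start)."""
    total = sum(s.points for s in stories)
    remaining = [total]
    for day in range(1, sprint_days + 1):
        done = sum(
            s.points
            for s in stories
            if s.done_on_day is not None and s.done_on_day <= day
        )
        remaining.append(total - done)
    return remaining


stories = [
    Story("Export report as CSV", "analyst", 5, done_on_day=2),
    Story("Reset password by email", "end user", 3, done_on_day=4),
    Story("Audit log for role changes", "admin", 8),
]
print(burndown(stories, sprint_days=5))  # [16, 16, 11, 11, 8, 8]
```

A flat tail like the one in this sample (8 points never burned down) is the kind of signal worth bringing to the next retrospective.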

Top tools we can use: Active Collab, Pivotal Tracker, VersionOne, Jira, Trello, StoriesOnBoard

Step 2: Use tools to capture every request

No change should be implemented outside the DevOps process. Every request to change the software or add something new to it should be captured by your DevOps tooling. The tooling should automate the intake of change requests from either side, whether they come from the business or from the DevOps team. For instance, a request to improve access to the database should be captured and tracked like any other change.
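As a rough illustration of "capture every request", the sketch below keeps an in-memory register of change requests and refuses to treat anything as deployable unless it was captured and approved first. The `ChangeLog` class, the status names, and the two allowed sources are assumptions made for this example; a real team would use its issue tracker for this.

```python
from dataclasses import dataclass
from typing import Dict

ALLOWED_SOURCES = {"business", "devops"}  # assumed: the two sides named in the text


@dataclass
class ChangeRequest:
    id: int
    summary: str
    source: str
    status: str = "open"  # assumed lifecycle: open -> approved


class ChangeLog:
    """In-memory register of change requests (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._requests: Dict[int, ChangeRequest] = {}

    def submit(self, summary: str, source: str) -> ChangeRequest:
        if source not in ALLOWED_SOURCES:
            raise ValueError(f"unknown source: {source!r}")
        request = ChangeRequest(len(self._requests) + 1, summary, source)
        self._requests[request.id] = request
        return request

    def approve(self, request_id: int) -> None:
        self._requests[request_id].status = "approved"

    def can_deploy(self, request_id: int) -> bool:
        # A change that was never captured, or is not yet approved, must not ship.
        request = self._requests.get(request_id)
        return request is not None and request.status == "approved"


log = ChangeLog()
req = log.submit("Improve access to the database", source="business")
print(log.can_deploy(req.id))  # False: captured but not approved
log.approve(req.id)
print(log.can_deploy(req.id))  # True
print(log.can_deploy(999))     # False: never captured
```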

Step 3: Usage of Agile Kanban project management

A primary advantage of Kanban is that it encourages teams to focus on improving flow through the system. As teams adopt Kanban, they become good at continuously delivering the work they have completed. Kanban therefore makes it easier to ship incremental product releases with small chunks of new functionality or defect fixes, which makes it well suited to the continuous delivery and deployment requirements of DevOps.

The other big advantage of Kanban is that it lets you visualize your entire value stream and ensure stable flow. It helps you combine the workflows of different functions and activities, from Dev through Integration/Build, Test, and Deployment, and beyond that to application monitoring. Initially, it will help your Dev and Ops people work collaboratively. Over time, you can evolve into a single team with a single workflow that includes all Dev and Ops activities. Kanban gives you visibility into this entire process and supports the transformation to a DevOps culture.
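The sketch below models a single Dev-to-Ops workflow as a Kanban board. The column names follow the stages mentioned above; the work-in-progress (WIP) limit is a common Kanban practice that we are assuming here, and the `KanbanBoard` class is hypothetical rather than the API of any particular tool.

```python
from typing import Dict, List, Optional


class KanbanBoard:
    def __init__(self, columns: List[str],
                 wip_limits: Optional[Dict[str, int]] = None) -> None:
        self.order = list(columns)
        self.columns: Dict[str, List[str]] = {name: [] for name in columns}
        self.wip_limits = wip_limits or {}

    def _has_room(self, column: str) -> bool:
        limit = self.wip_limits.get(column)
        return limit is None or len(self.columns[column]) < limit

    def add(self, card: str) -> None:
        first = self.order[0]
        if not self._has_room(first):
            raise RuntimeError(f"WIP limit reached in {first!r}")
        self.columns[first].append(card)

    def advance(self, card: str) -> str:
        """Move a card one column to the right and return the new column name."""
        for index, name in enumerate(self.order[:-1]):
            if card in self.columns[name]:
                target = self.order[index + 1]
                if not self._has_room(target):
                    raise RuntimeError(f"WIP limit reached in {target!r}")
                self.columns[name].remove(card)
                self.columns[target].append(card)
                return target
        raise ValueError(f"{card!r} is not on the board or is already in the last column")


board = KanbanBoard(["Dev", "Build", "Test", "Deploy", "Monitor"],
                    wip_limits={"Test": 1})
board.add("login fix")
board.add("new report")
board.advance("login fix")   # Dev -> Build
board.advance("login fix")   # Build -> Test
board.advance("new report")  # Dev -> Build
# "new report" cannot enter Test until "login fix" moves on to Deploy.
```

Limiting the Test column to one card forces the team to finish testing before pulling in more work, which is what keeps the flow stable.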

Step 4: Usage of tools to Log Metrics

Always opt for tools that help you understand the productivity of your DevOps processes, both automated and manual, so that you can determine whether they are working in your favor. First, decide which metrics are most relevant to your DevOps processes, such as speed to effective action versus the number of errors that occurred. Second, use an automated process to rectify issues without human intervention, for instance, automatically handling scaling problems for software running on a cloud platform.
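Both halves of this step can be sketched in a few lines. The first function summarizes two example metrics (mean lead time and failure rate) from deployment records; the second is a simple proportional scaling rule that needs no human in the loop. The metric names, the 60% CPU target, and the replica cap are assumptions chosen for illustration, not recommendations.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Deployment:
    lead_time_minutes: float  # time from commit to running in production
    failed: bool              # True if the deployment caused an error


def summarize(deployments: List[Deployment]) -> Dict[str, float]:
    """Speed versus errors: mean lead time and share of failed deployments."""
    if not deployments:
        raise ValueError("need at least one deployment")
    return {
        "mean_lead_time_minutes": mean(d.lead_time_minutes for d in deployments),
        "failure_rate": sum(d.failed for d in deployments) / len(deployments),
    }


def desired_replicas(current: int, cpu_percent: int,
                     target_percent: int = 60, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to load, within fixed bounds."""
    wanted = math.ceil(current * cpu_percent / target_percent)
    return max(1, min(max_replicas, wanted))


history = [
    Deployment(30, failed=False),
    Deployment(90, failed=True),
    Deployment(60, failed=False),
    Deployment(60, failed=False),
]
print(summarize(history))
print(desired_replicas(current=4, cpu_percent=90))  # 6
```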

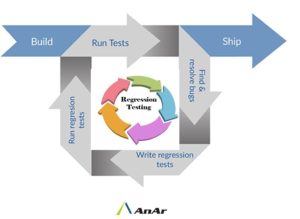

Step 5: Implementation

Running automated tests is just one part of test automation. Test automation is the ability to exercise the code, the data, and the resulting solution to ensure high quality. A continuous series of tests is a must with DevOps.

Step 6: Acceptance tests

Acceptance tests are necessary because they support the deployment of each part of the system and, with it, acceptance of the infrastructure. The testing process should also define how much acceptance testing applies to the apps, the data, and the test suite. Spend a good amount of time testing and retesting, and define acceptance tests so that they stay in sync with the criteria you selected. As applications evolve and new requirements come in, the tests should be run again.
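One way to keep acceptance tests in sync with the selected criteria is to write each criterion as a named, executable check. The sketch below does that against a plain dictionary describing an environment; the criteria, the keys, and the sample values are all hypothetical.

```python
from typing import Callable, Dict, Tuple

Environment = Dict[str, int]
Check = Callable[[Environment], bool]


def run_acceptance(criteria: Dict[str, Check],
                   env: Environment) -> Tuple[bool, Dict[str, bool]]:
    """Run every criterion and return (overall verdict, per-criterion results)."""
    results = {name: bool(check(env)) for name, check in criteria.items()}
    return all(results.values()), results


# Hypothetical acceptance criteria covering the app, its data, and performance.
criteria: Dict[str, Check] = {
    "service responds": lambda env: env["health_status"] == 200,
    "schema is current": lambda env: env["schema_version"] == env["expected_schema_version"],
    "p95 latency under 500 ms": lambda env: env["p95_latency_ms"] < 500,
}

staging = {
    "health_status": 200,
    "schema_version": 7,
    "expected_schema_version": 7,
    "p95_latency_ms": 640,
}

accepted, results = run_acceptance(criteria, staging)
print(accepted)  # False: the latency criterion fails
print(results)
```

When a criterion changes, only its entry in `criteria` changes, and rerunning the suite after each application update is a single call.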

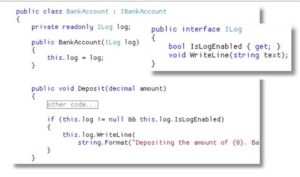

Automated Testing

Automated testing pays off over time by speeding up your development and testing cycles in the long run. And in a DevOps environment, it’s important for another reason: awareness.

To prepare for and support what Development builds, it’s important for Operations to have visibility into what is being tested, and how thoroughly. Unlike manual tests, automated tests are executed faithfully and with the same rigor every time. They also yield reports and trend graphs that help identify risky areas.

Risk is a fact of life in software, but you can’t mitigate what you can’t anticipate. Do your operations team a favor and let them peek under the hood with you. Look for tools that support wallboards and let everyone involved in the project comment on specific build or deployment results. Extra points for tools that make it easy to get Operations involved in blitz testing and exploratory testing.

Tools we can use: Bamboo, Bitbucket, Capture for Jira

Step 7: Continuous Feedback

Continuous feedback focuses on providing ongoing feedback and coaching by openly discussing an employee’s strengths and weaknesses on a regular basis. Feedback is also of the utmost importance for spotting gaps and inefficiencies in the app. Feedback loops help automate communication among the tests. The right tool should be able to spot any issue using manual or automated mechanisms, and the team should take a collaborative approach to solving the problem.

Release dashboards

One of the most stressful parts of shipping software is getting all the change, test, and deployment information for an upcoming release into one place. The last thing anyone needs before a release is a long meeting to report on status. This is where release dashboards come in.

Look for tools with a single dashboard integrated with your code repository and deployment tools. Find something that gives you full visibility on branches, builds, pull requests, and deployment warnings in one place.
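At its core, a release dashboard gathers these signals in one place and answers a single question: what is blocking the release? The following sketch shows that aggregation under simple assumptions; the `ReleaseStatus` fields and the blocking rules are hypothetical and would come from your repository and deployment tools in practice.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReleaseStatus:
    branch: str
    build_passed: bool
    open_pull_requests: int
    deployment_warnings: List[str] = field(default_factory=list)


def blockers(status: ReleaseStatus) -> List[str]:
    """List everything that should be resolved before the release ships."""
    found: List[str] = []
    if not status.build_passed:
        found.append(f"build failing on {status.branch}")
    if status.open_pull_requests:
        found.append(f"{status.open_pull_requests} pull request(s) still open")
    found.extend(f"deployment warning: {w}" for w in status.deployment_warnings)
    return found


status = ReleaseStatus(
    branch="release/2.4",
    build_passed=True,
    open_pull_requests=2,
    deployment_warnings=["staging disk usage above 80%"],
)
for item in blockers(status):
    print(item)
```

An empty list means the release is ready, which replaces the status meeting with a glance.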

DevOps tools

The DevOps tool categories include the following:

- Version control: a set of apps that track any changes made to a set of files over time, whether those changes are recorded manually or automatically. Compared with early version control systems, modern ones rely on either one central server (Subversion) or a web of distributed repositories (Git or Mercurial). Version control systems can also keep track of dependencies present in a version, for instance, type, brand, and database.

- Building and deployment: a set of tools that automate the building and deployment of software throughout the DevOps process, including continuous development and continuous integration.

- Functional and non-functional testing: a set of tools that provide automated testing of both the functional and non-functional aspects of the software. A testing toolset should provide integrated unit testing along with performance and security checks for the app. The goal of this testing is to verify the whole automated system.

- Provisioning: Configuration management is part of provisioning. Basically, it means using a tool like Chef, Puppet, or Ansible to configure your server. “Provisioning” often implies that it is the first time you do it, whereas configuration management usually happens repeatedly. These tools help create the platform required to deploy the software, monitor its functions, and log any changes that occur to the configuration of the data or software. They also help return the system to a stable state.

Must Have DevOps tools

Jenkins: Jenkins is a leading DevOps tool for monitoring the execution of repeated jobs. It allows DevOps teams to merge changes with ease and access outputs so that problems can be identified quickly.

Key Features:

- Self-contained Java-based program ready to run out of the box with Windows, Mac OS X, and other Unix-like operating systems

- Continuous integration and continuous delivery

- Easily set up and configured via a web interface

- Hundreds of plugins in the Update Center

Vagrant: Vagrant is another tool to help your organization transition to a DevOps culture. Vagrant also helps improve your workflow when using Puppet, improving the development process for both developers and operations.

Key Features:

- No complicated setup process; simply download and install within minutes on Mac OS X, Windows, or a popular distribution of Linux

- Create a single file for projects describing the type of machine you want, the software you want to install, and how you want to access the machine, and then store the file with your project code

- Use a single command, vagrant up, and watch as Vagrant puts together your complete development environment so that DevOps team members have identical development environments

Monit: Monit is a simple watchdog tool that ensures that a given process is running properly on the system. It is easy to set up and configure for a multiservice architecture.

Key Features:

- Small open source utility for managing and monitoring Unix systems

- Conducts automatic maintenance and repair

- Executes meaningful causal actions in error situations
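To show what a watchdog does in principle, here is a minimal Python sketch that starts a process, checks whether it is still alive, and restarts it if it has exited. This is not Monit's configuration syntax or implementation; the `Watchdog` class is hypothetical, and the sleeping child process is only a stand-in for a real service.

```python
import subprocess
import sys
from typing import List, Optional


class Watchdog:
    def __init__(self, command: List[str]) -> None:
        self.command = command
        self.process: Optional[subprocess.Popen] = None
        self.restarts = 0

    def ensure_running(self) -> str:
        """Start the process if needed and report what was done."""
        if self.process is None:
            self.process = subprocess.Popen(self.command)
            return "started"
        if self.process.poll() is None:  # poll() returns None while the child is alive
            return "running"
        # The child has exited: repair by launching it again.
        self.process = subprocess.Popen(self.command)
        self.restarts += 1
        return "restarted"

    def stop(self) -> None:
        if self.process is not None and self.process.poll() is None:
            self.process.terminate()
            self.process.wait()


if __name__ == "__main__":
    # Stand-in for a real service: a child Python process that just sleeps.
    dog = Watchdog([sys.executable, "-c", "import time; time.sleep(30)"])
    print(dog.ensure_running())  # started
    print(dog.ensure_running())  # running
    dog.stop()
```

Calling `ensure_running()` on a timer is the essence of the pattern; a dedicated tool adds the scheduling, alerting, and resource checks around it.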

PagerDuty: PagerDuty is a DevOps tool that helps protect brand reputation and customer experience by providing visibility into critical systems and applications. It quickly detects and resolves incidents, helping teams deliver high-performing apps and an excellent customer experience.

Key Features:

- Real-time alerts

- Gain visibility into critical systems and applications

- Quickly detect, triage, and resolve incidents from development through production

- Full-stack visibility across dev and production environments

- Event intelligence for actionable insights

Prometheus: Prometheus is popular with DevOps teams that use Grafana for dashboards. It is an open-source service monitoring system with a flexible query language for slicing time series data to generate alerts, tables, and graphs. It supports more than 10 languages and includes easy-to-implement custom libraries.

Key Features:

- Flexible query language for slicing and dicing collected time series data to generate graphs, tables, and alerts

- Stores time series in memory and on local disk, with scaling achieved by functional sharding and federation

- Supports more than 10 languages and includes easy-to-implement custom libraries

- Alerts based on Prometheus’s flexible query language

- Alert manager handles notifications and silencing

SolarWinds: SolarWinds offers real-time event correlation and remediation. Its Log & Event Manager software provides troubleshooting, security fixes, and data compliance.

Key Features:

- Normalize logs to quickly identify security incidents and simplify troubleshooting

- Out-of-the-box rules and reports for easily meeting industry compliance requirements

- Node-based licensing

- Real-time event correlation

- Real-time remediation

- File integrity monitoring

- Licenses for 30 nodes to 2,500 nodes

Conclusion:

Selecting the right tools for DevOps is a difficult task, made harder by the complexity of tools that are still relatively new to most development shops. However, if you follow the guidelines and checklist above, you should be able to work your way toward a dependable DevOps setup. To learn more about DevOps best practices, check out our other posts on why DevOps can have a huge impact on the efficiency of your SDLC, or contact us at info@anarsolutions.com.

http://www.anarsolutions.com/how-to-choose-the-right-devops-tools/utm-source=Blogger.com