How to select a data architecture for your application?

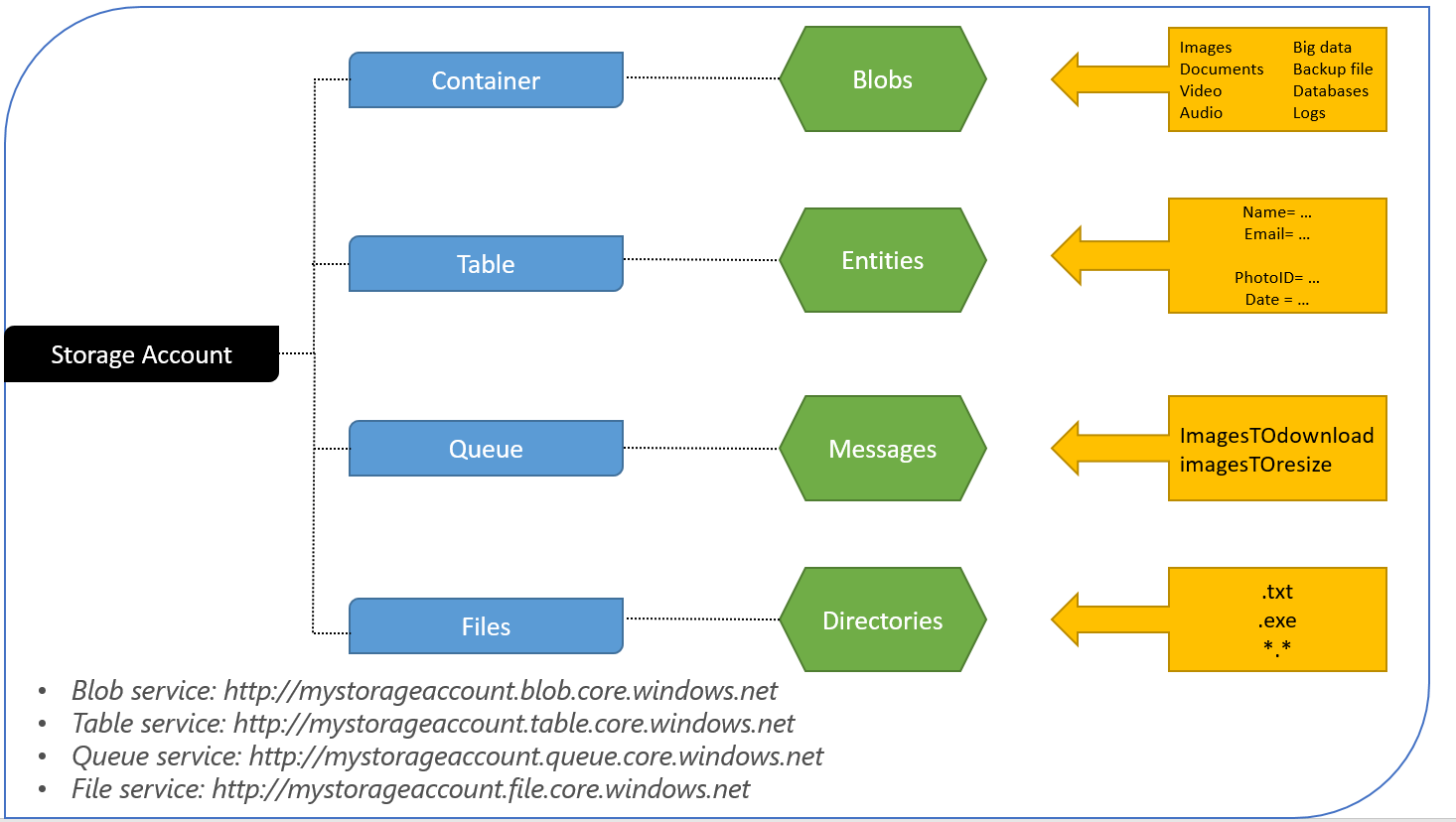

The era of cognitive computing is here. It is taking knowledge to the next level and bringing about a radical change in the software industry. This paradigm shift has made it conceivable to build a kind of thinking skill into many of the data processing solutions and human-designed systems on earth. At the same time, designing data-centric solutions in the cloud can be difficult. Not only do we have the usual task of meeting customer requirements, but we also have to deal with an ever-changing landscape of data tools, patterns and platforms. Azure's data architecture guidance offers a structured approach to designing data-centric solutions on Microsoft Azure. It is based on proven practices derived from customer engagements.

Significant changes with the advent of the cloud

From data processing to data storage, the cloud is changing the way applications are designed. Gone are the days when a single database handled all of a solution's data. Today, solutions use multiple polyglot data stores, each offering specific capabilities, and applications are designed around a data pipeline.
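To make the idea of a pipeline feeding more than one store concrete, here is a minimal Python sketch. It assumes an in-memory SQLite database as the relational store and a plain dictionary standing in for a key-value or document store; the event fields and the function names `ingest`, `transform` and `load` are hypothetical.

```python
import json
import sqlite3

# Hypothetical raw events arriving at the start of the pipeline.
RAW_EVENTS = [
    '{"order_id": 1, "customer": "alice", "amount": 30.0, "note": "gift wrap"}',
    '{"order_id": 2, "customer": "bob", "amount": 12.5, "note": ""}',
]

def ingest(lines):
    """Stage 1: parse raw JSON lines into dictionaries."""
    for line in lines:
        yield json.loads(line)

def transform(records):
    """Stage 2: reshape each record into the fields the stores need."""
    for rec in records:
        yield rec["order_id"], rec["customer"], rec["amount"], rec["note"]

def load(rows, db, notes_store):
    """Stage 3: send each part of a record to the store best suited to it."""
    for order_id, customer, amount, note in rows:
        db.execute("INSERT INTO orders VALUES (?, ?, ?)", (order_id, customer, amount))
        if note:
            notes_store[order_id] = note  # free-form text goes to the key-value stand-in
    db.commit()

db = sqlite3.connect(":memory:")  # relational store for structured data
db.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
notes = {}  # stand-in for a key-value or document store

load(transform(ingest(RAW_EVENTS)), db, notes)

total = db.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
print(total, notes)  # 42.5 {1: 'gift wrap'}
```

Because each stage is a generator, records flow through one at a time, and a new store can be added by extending only the `load` stage.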

Considerations when choosing a data center architecture for your application

When considering data center network architecture, engineers must strike a balance between reliability, performance, agility, scalability and cost. In addition, to make the most of data center investments, the architecture must be able to support both current and future applications. So what should engineers think about?

Let us look at the key decision factors when choosing a data center architecture:

- Evaluate each case individually. The size of the data center, its anticipated growth, and whether it is a new system or an upgrade of a legacy system will all influence the choice of architecture. For the best performance, understanding each unique situation is essential to choosing the right cabling infrastructure layout.

- Consider different architecture arrangements. Different layouts suit different circumstances and deliver different outcomes.

- Consider how the equipment will be installed and deployed.

Common data solutions explained

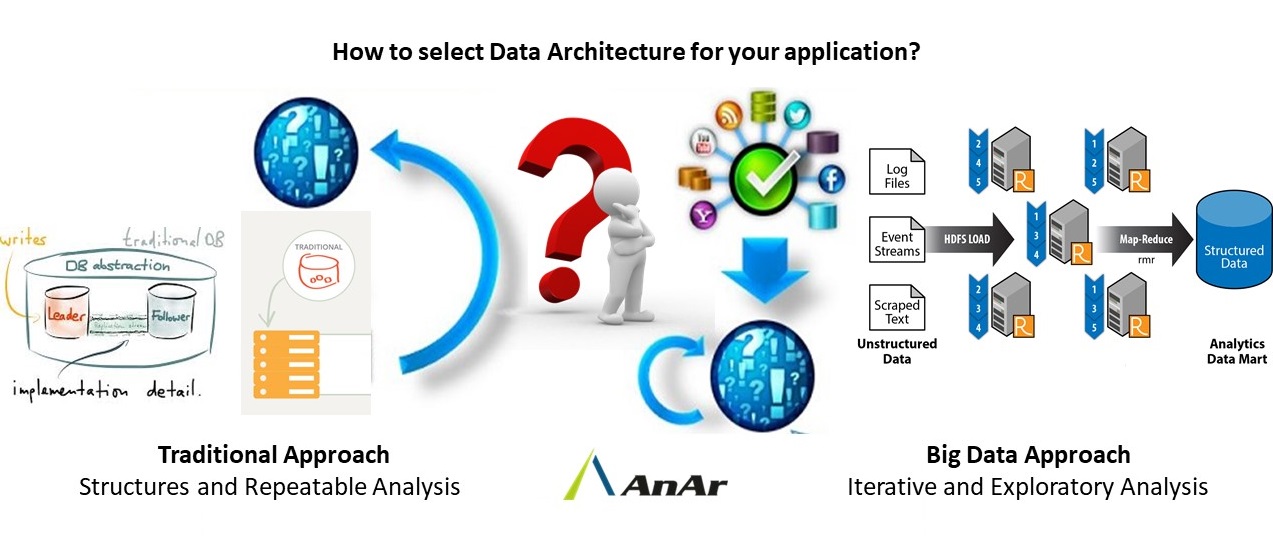

Every day, huge volumes of data are generated at an ever-accelerating rate. Consequently, big data analytics has become a powerful tool for companies looking to harness large amounts of valuable data for revenue and economic gain. Amid this big data rush, two types of data solutions (traditional RDBMS workloads and big data solutions) have each been heavily promoted as the one-size-fits-all answer to the industry's big data challenges.

Let us look at the design patterns of both, along with the best practices, challenges and processes involved in designing these types of solutions.

Traditional relational database solutions

A relational database is a set of formally described tables from which data can be accessed or reassembled in many different ways without having to reorganize the database tables. The standard user and application programming interface (API) of a relational database is the Structured Query Language (SQL). SQL statements are used both for interactive queries for information from a relational database and for gathering data for reports.
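As an illustration of answering different questions from the same table with SQL alone, here is a small sketch using Python's built-in `sqlite3` module. The `employees` table and its data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, dept, salary) VALUES (?, ?, ?)",
    [("Asha", "Sales", 50000), ("Ben", "IT", 65000), ("Chen", "IT", 70000)],
)

# The same table answers different questions; its structure never changes.
it_staff = conn.execute(
    "SELECT name FROM employees WHERE dept = 'IT' ORDER BY name"
).fetchall()
avg_by_dept = conn.execute(
    "SELECT dept, AVG(salary) FROM employees GROUP BY dept ORDER BY dept"
).fetchall()

print(it_staff)     # [('Ben',), ('Chen',)]
print(avg_by_dept)  # [('IT', 67500.0), ('Sales', 50000.0)]
```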

But what goes into the relational database model?

Standard relational databases let users define relationships between data across multiple tables. Each table, sometimes called a relation, contains one or more data categories in columns, also called attributes. Each row, also called a record or tuple, contains a unique instance of data for the categories defined by the columns. Each table has a unique primary key, which identifies the information in that table.
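The model described above (tables as relations, columns as attributes, a primary key per table, and predefined relationships between tables) can be sketched with SQLite. The `customers` and `orders` tables are hypothetical; note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Each table is a relation, each column an attribute, and each table has a primary key.
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " order_id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(customer_id),"
    " total REAL)"
)
conn.execute("INSERT INTO customers VALUES (1, 'Alice')")
conn.execute("INSERT INTO orders VALUES (100, 1, 25.0)")

def rejected(sql):
    """Return True if the database refuses the statement with an integrity error."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True

duplicate_rejected = rejected("INSERT INTO customers VALUES (1, 'Bob')")  # primary key must be unique
orphan_rejected = rejected("INSERT INTO orders VALUES (101, 99, 5.0)")    # customer 99 does not exist

# The predefined relationship lets the two tables be joined.
rows = conn.execute(
    "SELECT c.name, o.order_id, o.total "
    "FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id"
).fetchall()
print(duplicate_rejected, orphan_rejected, rows)  # True True [('Alice', 100, 25.0)]
```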

Advantages of relational databases

Relational databases offer many advantages. Let's discuss them in detail.

- The biggest advantage of relational databases is that they let users easily categorize and store data, which can later be queried and filtered to extract specific information for reports. They are also easy to extend: after the original database is created, a new data category can be added without having to modify all existing applications.

- It is accurate, as data is stored just once, which eliminates data duplication.

- It is flexible, as complex queries are easy for users to carry out.

- Many users can access the same database at the same time.

- It is trusted, as relational database models are mature and well understood.

- It is secure, as access to data in tables within a relational database management system can be restricted to specific users.
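The extensibility in the first bullet can be sketched with SQLite: a new data category is added as a column with `ALTER TABLE ... ADD COLUMN`, and a query written before the change keeps working. The `products` table and the `existing_report` function are hypothetical stand-ins for an existing application.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.execute("INSERT INTO products (name, price) VALUES ('Widget', 9.99)")

def existing_report(connection):
    """A query from an 'existing application', written before the schema changed."""
    return connection.execute(
        "SELECT name, price FROM products ORDER BY product_id"
    ).fetchall()

before = existing_report(conn)

# Extend the database with a new data category; existing rows get the default value.
conn.execute("ALTER TABLE products ADD COLUMN category TEXT DEFAULT 'general'")
conn.execute("INSERT INTO products (name, price, category) VALUES ('Gadget', 19.5, 'tools')")

# The existing query runs unchanged against the extended table.
after = existing_report(conn)
print(before)  # [('Widget', 9.99)]
print(after)   # [('Widget', 9.99), ('Gadget', 19.5)]
```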

These are some of the advantages these systems offer. However, despite their widespread use, relational databases also have some drawbacks. The main disadvantage is the cost of setting up and maintaining the database system: to set up a relational database, you generally need to obtain special software. As the amount of information grows, the increasing complexity of data creates another drawback. For example, relational databases are designed to organize data by common characteristics, so data that does not share such characteristics, such as complex images or multimedia, is harder to categorize. Also, some relational databases impose limits on field lengths, which can lead to data loss, because the amount of data each field can hold must be specified when the database is designed. Finally, in complex relational database systems, information sometimes cannot be shared easily from one large system to another.

Big Data architectures

A big data architecture is designed to handle the ingestion, processing, and analysis of data that is too large or complex for traditional database systems. More and more organizations are entering the big data world. However, the threshold at which they do so differs, depending on the capabilities of their users and their tools. For this reason, the meaning of big data keeps shifting as the tools for working with big data sets advance. This makes it easier than ever to extract value from large data sets through advanced analytics.

The data landscape has changed over the past few years, and data volumes grow every day. Some data arrives at a rapid pace and must be collected and observed continuously. Other data arrives more slowly, but in very large chunks. Some problems also call for advanced analytics or machine learning. These are the challenges that big data architectures seek to solve, and tackling them typically involves several kinds of workload, such as batch processing of data at rest and real-time processing of data in motion.
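The contrast between data that arrives in large chunks and data that arrives quickly and must be observed continuously is commonly handled with batch and stream processing. The Python sketch below compares the two on hypothetical sensor readings; `batch_average` and `StreamingAverage` are illustrative names, not part of any big data framework.

```python
from statistics import mean

def batch_average(readings):
    """Batch processing: the whole data set is at rest and processed in one pass."""
    return mean(readings)

class StreamingAverage:
    """Stream processing: update the result incrementally as each event arrives."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def update(self, value):
        self.count += 1
        self.total += value
        return self.total / self.count  # an up-to-date result after every event

historical = [20.0, 22.0, 24.0]  # hypothetical data that arrived earlier in bulk
print(batch_average(historical))  # 22.0

stream = StreamingAverage()
for reading in [21.0, 23.0, 25.0]:  # hypothetical events arriving one at a time
    latest = stream.update(reading)
print(latest)  # 23.0
```

The streaming version keeps only a running count and total, so its memory use stays constant no matter how many events arrive.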

When should you consider a big data architecture?

Consider a big data architecture in the following situations:

- When you need to store and process data in volumes too large for a traditional database.

- When you need to transform unstructured data for analysis and reporting.

- When you need to capture, process and analyze large streams of data in real time.
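Transforming unstructured data for analysis often starts with parsing free-form text into structured records. The sketch below does this for a few hypothetical web-server log lines using Python's `re` module, skipping lines that do not match the assumed format.

```python
import re
from collections import Counter

# Hypothetical unstructured web-server log lines.
LOG_LINES = [
    "2024-05-01 10:00:01 GET /home 200",
    "2024-05-01 10:00:02 GET /cart 500",
    "garbled line that does not match",
    "2024-05-01 10:00:05 POST /cart 200",
]

# Assumed format: date, time, method, path, three-digit status code.
PATTERN = re.compile(r"^(\S+) (\S+) (GET|POST) (\S+) (\d{3})$")

def to_records(lines):
    """Turn free-form lines into structured records, skipping lines that don't parse."""
    for line in lines:
        match = PATTERN.match(line)
        if match:
            date, time, method, path, status = match.groups()
            yield {"date": date, "time": time, "method": method,
                   "path": path, "status": int(status)}

records = list(to_records(LOG_LINES))
status_counts = Counter(r["status"] for r in records)  # ready for reporting
print(len(records), dict(status_counts))  # 3 {200: 2, 500: 1}
```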

For each type of solution, weigh the main selection criteria and compare the capabilities of the candidate technologies. Based on that comparison, you can choose the right technology for your scenario. However, there are certain scenarios where running workloads on a traditional database may be the better solution.
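One way to make such criteria explicit is a simple checklist. The sketch below encodes the three situations listed earlier as hypothetical `Requirements` fields and a `suggest_architecture` function; the 10 TB threshold is an assumption for illustration only, since where "big" begins depends on your users and tools. Treat the result as a starting point, not a verdict.

```python
from dataclasses import dataclass

# Hypothetical threshold, for illustration only.
LARGE_VOLUME_TB = 10.0

@dataclass
class Requirements:
    data_volume_tb: float       # expected data volume in terabytes
    mostly_unstructured: bool   # e.g. free-form logs, documents, media
    needs_real_time: bool       # streams must be analyzed with low latency

def suggest_architecture(req: Requirements) -> tuple[str, list[str]]:
    """Suggest a starting point and list the criteria that triggered it."""
    reasons = []
    if req.data_volume_tb >= LARGE_VOLUME_TB:
        reasons.append("data volume too large for a traditional database")
    if req.mostly_unstructured:
        reasons.append("unstructured data must be transformed for analysis")
    if req.needs_real_time:
        reasons.append("streams must be processed in real time")
    if reasons:
        return "big data architecture", reasons
    return "traditional RDBMS", reasons

print(suggest_architecture(Requirements(0.2, False, False)))
# ('traditional RDBMS', [])
print(suggest_architecture(Requirements(50.0, False, True))[0])
# big data architecture
```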

Conclusion

This write-up is by no means a deep dive into the nuances of the common categories of data solutions. Its aim is to give a good overview of the topic and to help you understand the key ideas involved in choosing the right data architecture or data pipeline for your scenario. From there, you can dive into the specific focus areas as needed to decide which Azure services and technologies best fit your requirements. If you have already settled on an architecture, it makes sense to jump straight to choosing the technology.

http://www.anarsolutions.com/selecting-data-architecture/utm-source=Blogger